Introduction

We examined the differences in word score agreement for three scoring methods of curriculum-based measurement of oral reading fluency (CBM-R). The three scoring methods were: (1) Traditional - the real-time human scores, comparable to traditional CBM-R assessments in schools; (2) ASR - automatic speech recognition scores; and (3) Recording - the criterion measure, where recorded audio files were scored by human assessors in a private space wearing headsets (with the ability to rewind, replay, and adjust audio). We also explored the effect of passage length using: (1) easyCBM passages as traditional CBM-R passages of about 250 words read for 60 seconds; and CORE passages read in their entirety that were (2) long, about 85 words, (3) medium, about 50 words, and (4) short, about 25 words.

This is the first study to compare scores by expert assessors to both ASR and traditional CBM-R scores consistent with those conducted in schools. These comparisons allowed for the analysis of the potential net gain of ASR compared to current school practices (as opposed to scores based on audio recordings), which we speculate is a more useful metric for educators, administrators, school district officials, and stakeholders. These results are part of our larger Content & Convergent Evidence Study. For details about the Content & Convergent Evidence Study procedures, including information on the sample, CBM-R passages, administration, and scoring methods, go here.

Passage-level results of words correct per minute (WCPM) scores for comparisons of scoring methods can be found here, and results comparing passage lengths can be found here.

Summary

We found, on average, very high word score agreement rates between the Recording criterion and Traditional scores (.97 to .99), as well as high word score agreement rates between the Recording criterion and ASR scores (.73 to .94). Although the human-to-human agreement rates were exceedingly high and had much less variance than the human-to-machine agreement rates, the ASR generally performed well compared to the criterion word scores.

If, according to Zechner et al. (2012), the “known” ASR word accuracy rate for students is .71 to .85, then all but one of the ASR & Recording average agreement rates met or exceeded the upper end of that range.

Given these results, stakeholders and educators can weigh the balance between high accuracy of human assessors and the resource demands of one-to-one CBM-R administration. That is, although there is some loss in accuracy of CBM-R word scores with an ASR system, gains stand to be made in terms of time and human resources in administration, as an entire classroom can be assessed and scored simultaneously under the supervision of one educator.

In addition, the loss in word accuracy is less conspicuous when looking at WCPM scores, as demonstrated by the results reported here.

Moreover, this trade-off between accuracy and resources should diminish over time as technology advances and ASR engines become more accurate.

Analysis

We calculated the percent of words read in a passage that were scored in agreement (as correct or incorrect) between the Recording criterion and the ASR or Traditional scores.
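As an illustration of this calculation, the sketch below computes an agreement rate for a single passage. It is a minimal example, not our analysis code: the function name `agreement_rate`, the boolean encoding of word scores (True = correct, False = incorrect), and the 10-word passage are all hypothetical. The rate is returned as a proportion, on the same 0 to 1 scale as the results table.

```python
from typing import Sequence


def agreement_rate(criterion: Sequence[bool], other: Sequence[bool]) -> float:
    """Proportion of words given the same score (both correct or both
    incorrect) by the criterion scoring and a second scoring method."""
    if len(criterion) != len(other):
        raise ValueError("Both scorings must cover the same words")
    if len(criterion) == 0:
        raise ValueError("At least one scored word is required")
    # A word counts as agreement when both methods assign the same score.
    matches = sum(c == o for c, o in zip(criterion, other))
    return matches / len(criterion)


# Hypothetical word scores for one 10-word passage (illustrative data only).
recording = [True, True, False, True, True, True, False, True, True, True]
asr = [True, True, True, True, True, True, False, True, False, True]

rate = agreement_rate(recording, asr)
print(f"ASR & Recording agreement: {rate:.2f}")
```

In this made-up passage the two scorings disagree on two of the ten words, so the agreement rate is .80.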

Results

The table below shows the mean (SD) agreement rates between ASR & Recording and Traditional & Recording aggregated by grade and passage length.

| Grade and passage length | ASR & Recording: Mean | ASR & Recording: (SD) | Traditional & Recording: Mean | Traditional & Recording: (SD) |
|---|---|---|---|---|
| Grade 2 | | | | |
| short | 0.89 | (0.13) | 0.97 | (0.05) |
| medium | 0.89 | (0.13) | 0.98 | (0.04) |
| long | 0.89 | (0.14) | 0.98 | (0.04) |
| easyCBM | 0.73 | (0.20) | 0.97 | (0.03) |
| Grade 3 | | | | |
| short | 0.93 | (0.09) | 0.98 | (0.04) |
| medium | 0.93 | (0.09) | 0.98 | (0.03) |
| long | 0.92 | (0.11) | 0.98 | (0.03) |
| easyCBM | 0.85 | (0.14) | 0.97 | (0.04) |
| Grade 4 | | | | |
| short | 0.94 | (0.08) | 0.98 | (0.03) |
| medium | 0.94 | (0.08) | 0.99 | (0.02) |
| long | 0.93 | (0.09) | 0.98 | (0.02) |
| easyCBM | 0.85 | (0.15) | 0.97 | (0.07) |

In general, the average agreement rates between Traditional & Recording were exceptionally high, ranging from .97 to .99, and the average agreement rates between ASR & Recording were also high, ranging from .73 to .94, with all but one at or above .85. The SDs for the Traditional & Recording agreement rates (.02 to .07) were two to seven times smaller than those for ASR & Recording (.08 to .20), indicating much less variance in the former.
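The comparison of SDs can be checked directly against the reported table values. The sketch below assumes the comparison is made row by row (same grade and passage length), which is one plausible reading rather than a documented procedure; the variable names are illustrative, and the SDs are the rounded values from the table.

```python
# (grade, passage length, ASR & Recording SD, Traditional & Recording SD),
# copied from the results table (values rounded to two decimals).
rows = [
    (2, "short", 0.13, 0.05),
    (2, "medium", 0.13, 0.04),
    (2, "long", 0.14, 0.04),
    (2, "easyCBM", 0.20, 0.03),
    (3, "short", 0.09, 0.04),
    (3, "medium", 0.09, 0.03),
    (3, "long", 0.11, 0.03),
    (3, "easyCBM", 0.14, 0.04),
    (4, "short", 0.08, 0.03),
    (4, "medium", 0.08, 0.02),
    (4, "long", 0.09, 0.02),
    (4, "easyCBM", 0.15, 0.07),
]

# Ratio of the ASR SD to the Traditional SD within each grade-by-length cell.
ratios = {
    (grade, length): asr_sd / trad_sd
    for grade, length, asr_sd, trad_sd in rows
}

smallest = min(ratios.values())
largest = max(ratios.values())
print(f"ASR SD is {smallest:.1f} to {largest:.1f} times the Traditional SD")
```

Under this row-by-row reading, the ratios run from about 2.1 (Grade 4 easyCBM) to about 6.7 (Grade 2 easyCBM), in line with the "two to seven times" description.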

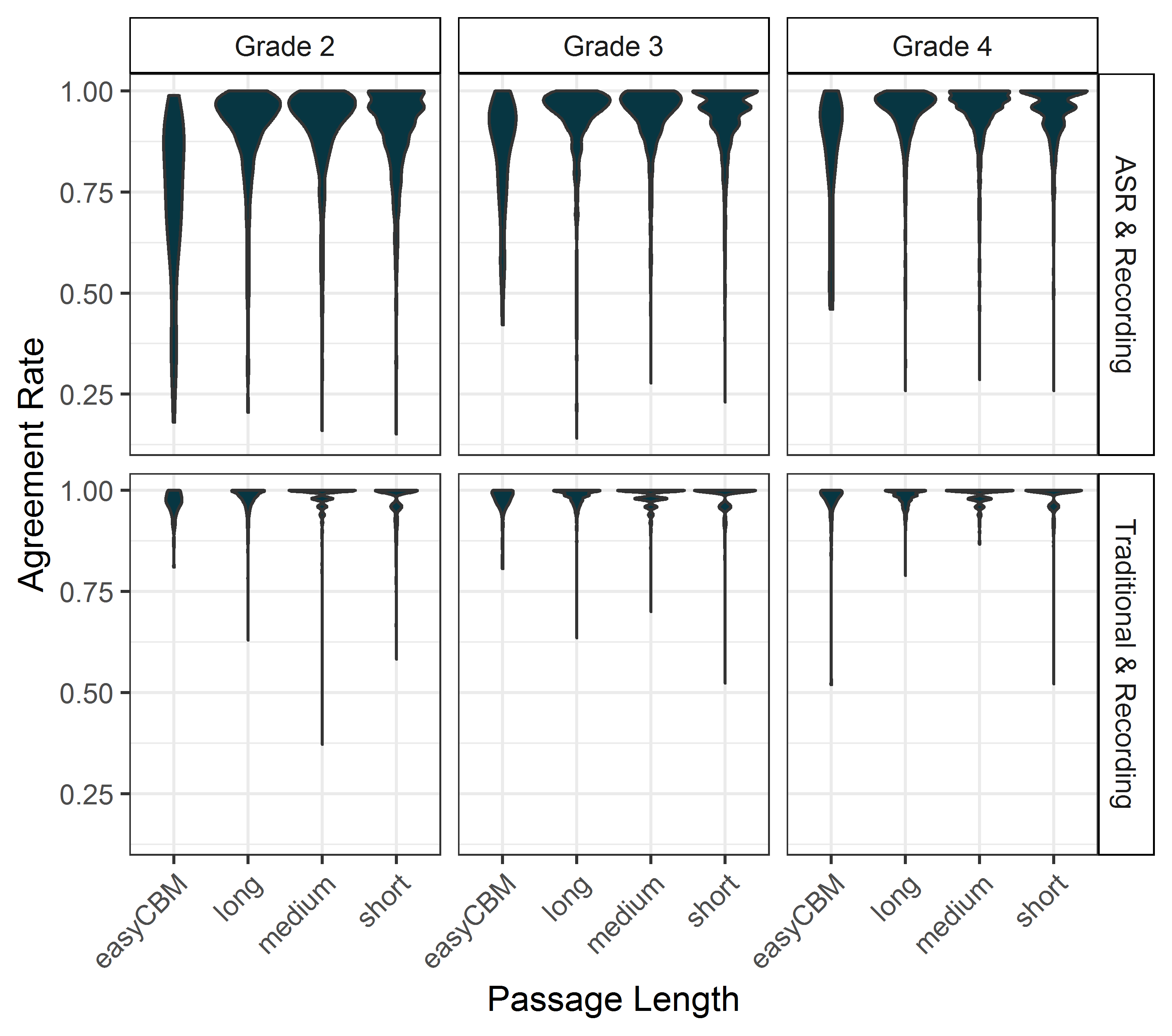

The figure below shows the violin plot distributions of passage-level agreement rate by grade and passage length, separately for ASR & Recording and Traditional & Recording. The interquartile ranges for the Traditional & Recording agreement rates are much smaller and generally set near 1.0, whereas those interquartile ranges are larger for ASR & Recording and set near .85 or above. The ASR-Recording agreement rates are poorest for the easyCBM passages.

Acknowledgments

The research reported here was supported by the Institute of Education Sciences, U.S. Department of Education, through Grant R305A140203 to the University of Oregon. The opinions expressed are those of the authors and do not represent views of the Institute or the U.S. Department of Education.